In the past few years, two documentary works have used AI to reproduce the voices of dead male celebrities, Anthony Bourdain and Andy Warhol, raising a series of technical, ethical and aesthetic questions about the relationship between the human voice as a unique sonic identifier of an individual person and the role of appropriation and invention in non-fictional media. Resituating the writing and training of a voice-based AI within the history of appropriation in art practice, this project will open up the process of writing and training an AI to an audience of artists, scholars and members of the public in order to investigate how AI and machine learning destabilize the association between voice, authenticity and identity.

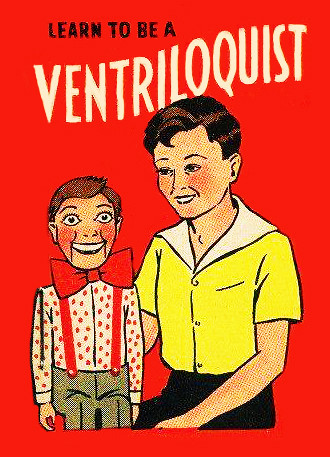

In this practice-based project, the researchers will train an AI to speak in their own voices. The aim is to open up technical, ethical and aesthetic questions relating to the process of machinic appropriation (or theft) across three interrelated domains: (1) machine learning, where training (a process usually obscured by the resulting fully functioning, fully trained AI) is framed as ventriloquising the researchers’ own voices; (2) software development, where machine learning source code is duplicated, replicated, and rewritten from various open sources (a common practice in the field); and (3) artistic practice, as mentioned above, where questions of authorship, authenticity, and identity are addressed, staged, and contextualised.

The focus on voice and sound is central to the project. Many AI-related artworks tend to focus on text and image, which leaves a largely unexplored space for experimenting with novel art forms that combine voice and machine learning. While AI voice appropriation has commonly been framed in a hyperrealist key as “deepfake,” this project seeks to approach speech and voice appropriation as an artistic practice in which “fake” (deep or not) becomes a mode of creative experimentation and artistic production. This is why the two researchers will use their own voices to train their AI and will foreground the very process of training a “fake” as one of the project’s outputs.

The project will directly address the ethics of coding the human voice, from questions of source coding an AI system to questions of artificial expression and ventriloquy. Because appropriation, as conceptualized in this project, is not only a concern in the arts (the topic has a wealth of historical precedents, including various works by Warhol) but is also posited as a core condition of machine learning and software development, the project aims to advance an understanding of the algorithmic condition of contemporary artistic and cultural production using AI. An exploration of this very condition, conceptualized as “machine theft,” will be staged in the second instalment of the project.

David Gauthier and Anna Poletti

This project is supported by the Special Interest Group, AI in Cultural Inquiry and Art, within the Human-Centred AI Research Cluster at Utrecht University.